How should the quality of a system be measured? More particularly, how should quality be ascertained when the system is complex and incorporates important components not readily evaluated by quantitative means?

Systems thinking has evolved since the surge of interest during World War II. We now expect systems to include artifacts, communications, activities, events, experience, policies, procedures, programs and more as specifically designed components. It would appear that systems are a lot “softer” than they were sixty years ago, but that isn’t the case. It is just that, instead of ignoring the softer elements, we now recognize and deal with them in our systems. The broader reach has allowed far more subtle applications, but it comes with a need for more sophisticated means of evaluation and a recognition that, as much as we might not like it, not everything can be measured in objective, quantitative terms.

That is not necessarily a loss. In fact, for evaluation purposes, an absolute numerical value is often not what we want. What we want is a relative value that establishes better/worse, good/bad comparisons of systems against other systems, against an ideal, or against their evolutionary antecedents.

The kinds of systems of interest now often have human components who perform important functions, functions that might be performed by machines if we knew how to construct them, but are not—exactly because of the adaptability human beings bring to their tasks. They are special—and more difficult to evaluate—because they are not machines. People require sensitive scrutiny because they are complex. Subjective evaluation can respond more appropriately when many factors must be integrated without the certainty of objectivity. Qualitative modeling fits well when systems are complex and composed of hard and soft elements.

In this article I will suggest a method for evaluating systems that takes advantage of the concepts introduced in previous articles to provide the subtlety and thoroughness that today’s complex systems require.

Form of the Criteria

Several years ago, after one of my workshops explaining the Structured Planning process to a large company, one of the executives came up to me with an interesting question. He asked, “Can Structured Planning be used to evaluate systems? We have a complex system product, and we don’t honestly know how well it is performing and, worse, we don’t have a good way to compare it with our competitors’ products!” I had to tell him that Structured Planning had not been developed for that purpose, but it got me thinking. A few years later, I began to develop that capability as another tool in the Structured Planning toolkit.

Two forms of criteria were already being created in Structured Planning: Defining Statements and Function Structures (see my articles: Goals and Definitions and Covering User Needs). Defining Statements are high-level policy statements taking positions on issues pertinent to a system under development. A Function Structure is a hierarchical structure identifying and organizing specific Functions the system must perform. Either could be used as criteria against which a system could be measured. Either could provide detailed, granular results as well as results aggregated hierarchically for higher level evaluations.

Hierarchically organized criteria don’t have to be used, but they bring the extra value of levels of generality. For criteria and system descriptions as we will use them here, the mechanism forming the hierarchy is simply inclusion. Higher level clusters include those below that are strongly related. Nothing new is added except a name descriptive of the more general grouping the cluster covers.
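As a rough illustration of hierarchy by inclusion, the following Python sketch models a cluster as nothing more than a name plus the items it includes. The `Cluster` class and the restaurant names are hypothetical, chosen only for this example; they are not taken from Structured Planning itself.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """A named grouping; it adds nothing but a name for what it includes."""
    name: str
    children: list["Cluster"] = field(default_factory=list)

    def leaves(self) -> list[str]:
        """Return the names of the lowest-level items this cluster includes."""
        if not self.children:
            return [self.name]
        names: list[str] = []
        for child in self.children:
            names.extend(child.leaves())
        return names


# Hypothetical fragment: a higher-level cluster including a lower one.
seating = Cluster("Seating guests", [
    Cluster("Greet guests"),
    Cluster("Assign table"),
])
serving = Cluster("Serving", [seating, Cluster("Take order")])

print(serving.leaves())  # ['Greet guests', 'Assign table', 'Take order']
```

Because a cluster only groups what lies below it, any result computed for the lowest-level items can later be aggregated upward along the same structure.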

Form of the Object to Be Evaluated

In traditional forms of multi-criterion evaluations (e.g., criterion function analysis) the object of evaluation is usually a single entity: a product, service, program or other object of interest. Because the purpose of evaluation is often comparison and choice, several entities are usually evaluated as alternatives or options with different features. For each criterion, the relevant features of the entity are called up and the entity is given a score based on the qualities concerned, which go unstated but are recalled or looked up.

This can be the form used for system evaluation—the system represented as a single entity—but it need not be. There is much to be gained by treating the system description in the same hierarchical, multi-variable way as the criteria. If this form is chosen, the system is broken down progressively from major components and divisions of them to a level comfortably specific in functionality. These “System Elements” are evaluated against the full range of equally specific criteria. The System Elements may have already been established if the system was developed using Structured Planning (see my article Shaping Complex Ideas).

One of the advantages of this description is greater precision in establishing exactly what is being evaluated against the criteria. Another advantage is the ability to score each System Element against all of the elements of the criteria. It is not unusual that an elemental component of a system may help the system to meet criteria for which it was never intended. This crossover system value can be substantial and needs to be detected and exploited even if it takes formal system evaluation to do it!

Scoring

The enumeration of individual elements of both criteria and system provides two lists that can be scored against each other in a matrix format. Matrix scoring adds time-consuming work to the evaluation process though, so there should be reciprocal value added, and the scoring process should be as transparent as possible to minimize the effort.

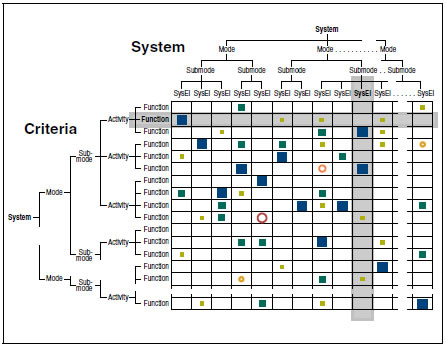

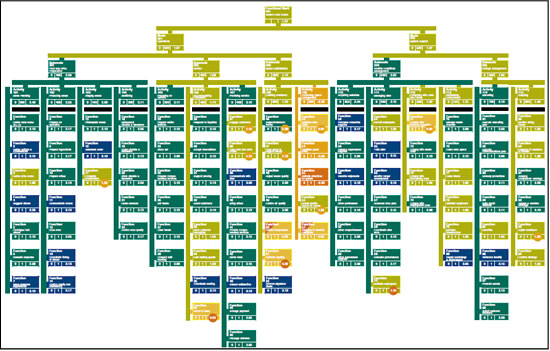

The value added comes from the opportunity to consider how each System Element contributes (or could contribute) to the full range of criteria. Not only does this introduce many new viewpoints from which to see elements of the system, it opens the way to calculating a composite score for each System Element reflecting its performance across the whole range of criteria. And it enables a similar aggregate score to be calculated for how the whole system responds to each criterion. A typical matrix is shown in Figure 1 with paths outlined for the calculations for a single Function criterion and a single System Element.

Figure 1 – A system characterized by its System Elements, evaluated against criteria in the form of a Function Structure. The graphic rendition of scores shows how individual Functions and System Elements are evaluated for the whole system (see Figure 2).
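The matrix idea can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the Function and System Element names, the signed integer scores, and the two helper functions. The point is only that one row gathers every element's score against a single Function, while one column gathers a single element's scores against every Function.

```python
# Rows are Functions (criteria); columns are System Elements.
# All names and scores below are made up for illustration.
functions = ["Greet guests", "Assign table", "Take order"]
elements = ["Host stand", "Reservation app", "Floor plan"]

scores = [
    [3, 1, 0],    # Greet guests
    [1, 2, -1],   # Assign table
    [0, 0, 1],    # Take order
]


def scores_for_function(name: str) -> list[int]:
    """All System Element scores against one Function (one matrix row)."""
    return scores[functions.index(name)]


def scores_for_element(name: str) -> list[int]:
    """One System Element's scores against every Function (one column)."""
    col = elements.index(name)
    return [row[col] for row in scores]


print(scores_for_function("Assign table"))   # [1, 2, -1]
print(scores_for_element("Floor plan"))      # [0, -1, 1]
```

These row and column lists are the inputs from which a composite value for a criterion or for a System Element would be calculated.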

To make the scoring as transparent as possible, a common scale is used that is qualitative and intuitively easy to grasp. Because a system component can (unintentionally or unavoidably) make it difficult for the system to meet a criterion’s requirements as well as contribute to achieving its goals, the scale is bi-directional with negative as well as positive sides. At its neutral center, a System Element has no effect on the criterion. Positive rankings reflect contributions to satisfying the criterion; negative rankings register qualities contributing to the prevention of satisfaction.

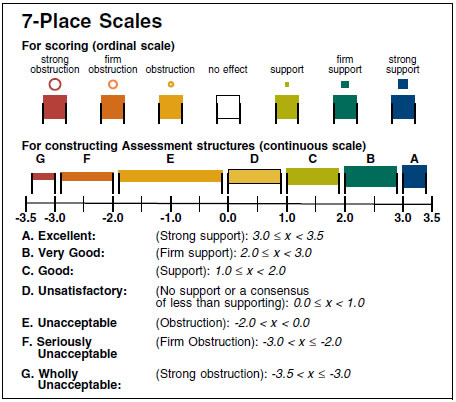

The scale can be set to a level of refinement appropriate to the system in question and the criteria to be used, the simplest being a neutral value with one level of positive support and one of negative obstruction—any element supports, obstructs or has no effect. Typical scales, though, have two or three positive and negative levels, reflecting evaluators’ desire for higher levels of resolution. In my experience, the three-level scale is easiest to use. Figure 2 shows such a scale.

Figure 2 – Scales for scoring (Figure 1) and for creating the blocks and links of Assessment structures (Figures 4 and 5). Link color and thickness follow the parent block from which the link descends.
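One way to hold a three-level bi-directional scale in code is an integer enumeration running from -3 to +3. This is a minimal sketch; the level labels are placeholders invented for the example and are not the wording used in Figure 2.

```python
from enum import IntEnum


class Score(IntEnum):
    """Three-level bi-directional scale; level labels are placeholders."""
    STRONG_OBSTRUCTION = -3
    MODERATE_OBSTRUCTION = -2
    SLIGHT_OBSTRUCTION = -1
    NO_EFFECT = 0
    SLIGHT_SUPPORT = 1
    MODERATE_SUPPORT = 2
    STRONG_SUPPORT = 3


def direction(score: Score) -> str:
    """Classify a score as supporting, obstructing, or neutral."""
    if score > 0:
        return "supports"
    if score < 0:
        return "obstructs"
    return "no effect"


print(direction(Score(2)))    # supports
print(direction(Score(-1)))   # obstructs
```

Using an enumeration means an out-of-range entry such as `Score(4)` raises a `ValueError` instead of silently entering the matrix.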

Although this kind of scale is subjective, judgments are not entirely restricted to the subjective. Where a criterion can be objectively evaluated, a conversion table can be created to convert objective values to positions on the qualitative scale.
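A conversion table of this kind might look like the following Python sketch. The criterion (average wait for a table), the thresholds and the scores they map to are all invented for illustration; a real table would come from the evaluators' own judgment of what each measured value means.

```python
from bisect import bisect_right

# Hypothetical criterion: average wait for a table, in minutes.
# A wait below 5 scores +3, from 5 up to (not including) 10 scores +2,
# and so on; 45 minutes or more scores -3.
WAIT_UPPER_BOUNDS = [5, 10, 15, 20, 30, 45]   # ascending, in minutes
WAIT_SCORES = [3, 2, 1, 0, -1, -2, -3]        # one more entry than bounds


def wait_to_score(minutes: float) -> int:
    """Map a measured wait time onto the -3..+3 qualitative scale."""
    return WAIT_SCORES[bisect_right(WAIT_UPPER_BOUNDS, minutes)]


print(wait_to_score(3))    # 3
print(wait_to_score(17))   # 0
print(wait_to_score(60))   # -3
```

Once converted, an objectively measured criterion sits on the same scale as the subjectively scored ones and can be combined with them.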

Calculating Values

The calculated value for any System Element will be a function of the individual scores for the element against all the lowest level criteria. The same will be true for a calculated value for any individual criterion—it will be a function of the scores for it against all of the System Elements. When combining the individual scores, the algorithm for calculation should respect two important considerations:

First, it should produce a composite value consistent with the scoring scale: at best slightly above the maximum possible on the scale, at worst slightly below the minimum possible. In this way, calculated scores will be comparable and intuitively compatible with the scale values used in the original scoring.

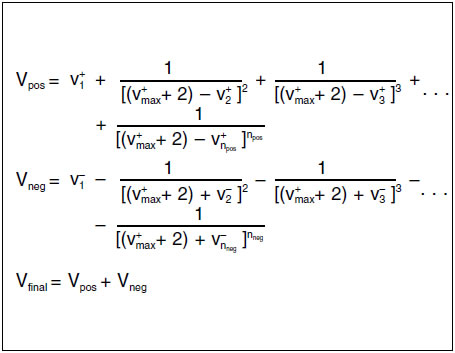

Second, it should recognize the concept that a criterion need only be fulfilled once; multiple contributions are desirable (the system model again), but a single instance of support is enough, the higher the better. If scores are averaged as a simple means of accumulation, that principle can be seriously violated. For example, a set of scores on a -3 to +3 scale such as {+3, +1, +1, +1} would produce an average of 1.5, completely obscuring the fact that a single System Element exists that maximally fulfills the criterion’s requirement. To avoid that difficulty, the algorithm of Figure 3 employs a series model that adds values in descending order of magnitude, beginning with the greatest (or the least when considering negative scores), and builds each succeeding score into a progressively smaller term. The resulting calculated score is asymptotic to the greatest score received plus 0.5 for the supporting, positive values and to the lowest score received minus 0.5 for any negative values. The algorithmic result for the four-value example is 3.08, making it clear that the criterion is very well fulfilled and that there is more than one contributing element.

Figure 3 – Calculation Algorithm:

1. For each criterion or System Element, sort positive scores, v+, highest first; sort negative scores, v−, lowest first.
2. Calculate composite values for the positive series and negative series.
3. Combine composite values for the final score.
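The three steps can be sketched in Python, with an important caveat: the formulas of Figure 3 are not reproduced in the text, so the weighting below is an assumption chosen only to show the stated behavior (strongest score first, progressively smaller terms, a limit of 0.5 beyond the extreme score). Each later score is scaled by its size relative to the leading score and by a halving weight, and the positive and negative composites are simply added; both choices are hypothetical. With these assumed weights the four-value example comes out near 3.15 rather than the 3.08 reported above, so this is not the published formula.

```python
def series_composite(values: list[float]) -> float:
    """Composite of same-signed scores: the extreme score plus shrinking
    contributions from the rest (assumed halving weights, not Figure 3's)."""
    values = [v for v in values if v != 0]  # neutral scores add nothing
    if not values:
        return 0.0
    ordered = sorted(values, key=abs, reverse=True)  # strongest score first
    lead = ordered[0]
    sign = 1.0 if lead > 0 else -1.0
    # The i-th score (counting from 2) is weighted by 0.5**i, so the extra
    # amount can approach but never reach 0.5 in magnitude.
    extra = sum((abs(v) / abs(lead)) * 0.5 ** i
                for i, v in enumerate(ordered[1:], start=2))
    return lead + sign * extra


def composite_score(scores: list[float]) -> float:
    """Steps 1-3: split by sign, build each series, then combine."""
    positives = [s for s in scores if s > 0]
    negatives = [s for s in scores if s < 0]
    # Assumption: the two composites are simply added.
    return series_composite(positives) + series_composite(negatives)


example = [3, 1, 1, 1]
print(sum(example) / len(example))          # 1.5, the plain average
print(round(composite_score(example), 2))   # 3.15 under these assumed weights
```

Whatever the exact weights, the contrast with the plain average is the point: the composite stays anchored to the best single score, and additional supporting scores can only nudge it upward within a fixed margin.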

Conveying Results

So far, so good. The evaluation process allows systems to be described hierarchically with a full range of components—physical, procedural and organizational—included as System Elements. Criteria for evaluation are also described hierarchically as policy positions to be satisfied or Functions to be performed. Scoring is performed using a bi-directional qualitative scale with a negative side, positive side and neutral center, and an algorithm for combining scores enables values to be calculated in the range of the scoring scale for each System Element and each criterion. What remains is to present the results in a way that allows both forest and trees to be seen.

Presenting assessment results as hierarchies is a good way to cover both wide and narrow focus. It ties results directly to the input hierarchies and allows conditions to be seen in general or in detail at a glance. And, it opens the door to weighting as a means to incorporate emphasis. Groupings of criteria and system elements can be given importance that reflects the context of a particular system’s use and the viewpoint of the analyst.

Figures 4 and 5 split a Functional Assessment for a restaurant. Blocks at the bottom of the structure (below Activity blocks) represent Functions. At higher levels, blocks represent increasingly more inclusive groupings of Functions as Activities, Sub modes and Modes of the restaurant’s operation. Block color is determined by the system’s performance in fulfilling the Function or Functions associated. Along with identifying information, each block shows a criticality factor for how critical satisfactory fulfillment of its function is to the system, a weight for its importance relative to others in its parent cluster, and the calculated performance value. Blocks above the lowest level accumulate the values of those below, the block at the top presenting total Functional Merit, a single value summing up the full assessment for the functional criteria. From a quick examination, it is evident that Functions under Activity 109, Controlling Space Arrangement, need to be better served.

Figure 4 – A Functional Assessment structure for a restaurant. Functions below the Activity level belong to the Activity above them. Blue blocks are excellently supported by System Elements; dark green blocks have very good support; light green blocks have good support. Yellow blocks are unsatisfactorily supported; light orange blocks have unacceptable obstruction; dark orange blocks have seriously unacceptable obstruction. Calculations and presentation produced using the computer program SYSEVAL.
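To give a feel for how values might accumulate up such a structure, here is a minimal Python sketch. It assumes that a parent block takes the weighted mean of its children's values, using each child's weight relative to its siblings. That rule, the `Block` class, its field names and the sample numbers are all assumptions for illustration; the rule actually used by SYSEVAL is not stated here, and the criticality factor is left out because its role in the calculation is not described.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Block:
    """One block of an assessment structure (field names are assumptions)."""
    name: str
    weight: float = 1.0              # importance relative to siblings
    value: Optional[float] = None    # calculated score, set on leaf Functions
    children: list["Block"] = field(default_factory=list)

    def rolled_up(self) -> float:
        """Leaf: its own value. Cluster: weighted mean of its children."""
        if not self.children:
            if self.value is None:
                raise ValueError(f"leaf block {self.name!r} has no value")
            return self.value
        total_weight = sum(c.weight for c in self.children)
        return sum(c.weight * c.rolled_up() for c in self.children) / total_weight


# Hypothetical structure: one Mode containing two Activities.
activity_a = Block("Activity A", children=[
    Block("Function 1", weight=2, value=3.0),
    Block("Function 2", weight=1, value=0.0),
])
activity_b = Block("Activity B", children=[
    Block("Function 3", value=-1.0),
])
mode = Block("Mode", children=[activity_a, activity_b])

print(activity_a.rolled_up())  # 2.0
print(mode.rolled_up())        # 0.5
```

Changing the weights changes the emphasis without touching the underlying scores, which is how the same assessment can reflect different contexts of use or different analysts' viewpoints.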

A similar structure can be produced for System Assessment, and additional analyses can be applied (some visible in the figure). For an extended, detailed discussion of theory and algorithms, see Evaluation of Complex Systems by Charles L. Owen, Design Studies Vol. 28 No. 1, (January 2007): 73-101. A .pdf version of that paper modified for this format can be downloaded from www.id.iit.edu/141/. Additional discussion of system evaluation and other tools in the Structured Planning toolkit can be found in the textbook, Structured Planning, Advanced Planning for Business, Institutions and Government, also available through the same Publications section of the web site.

Final Thoughts and Possibilities

The Functional Assessment example of Figures 4 and 5 is for a restaurant. When we think of evaluating restaurants, we usually think of stars or diamonds and judgments about the quality of food, service and ambiance. But from the viewpoint of the planner, designer, manager or innovator, those evaluations miss the point because it is the system creating the dining experience that is the proper subject of evaluation—the operations that produce the meal, its setting and enjoyment. Innovating a better dining experience means innovating the many system components of the restaurant. Not all planning and design problems fit this model, but many do, and it behooves the innovator to think carefully about the proper focus. Is it the product that needs evaluation or the system that creates the product?

That said, some of the seemingly more intractable problems of evaluation today may well be susceptible to this kind of system evaluation. Among them is the problem of improving our schools. Teachers are under intensive scrutiny today, but much of the evaluation is being done with questionably appropriate measures of student test results. Perhaps the educational system should be thoughtfully modeled as a Function Structure to include the multiple functions involved besides drilling students on testable facts in the classroom. With an archetypal hierarchical system model to evaluate against a functional model of the teaching/learning process, individual teachers, staff members and administrators could be evaluated as well as component parts of a school’s operational system. This strikes me as a procedure at once more insightful and fairer than the kinds of student test-score-based methods now in use.

Other kinds of organizations might similarly benefit where the evaluation of individual performance is critical. Sports teams are an example. Players usually have multiple skills they must perform and more they may be able to perform (executing different Functions in different Activities of different Modes). And less obvious team and personal skills are also very important. Actions on the field, important as they are, are not the only functions that should be taken into consideration—leadership in the clubhouse, ability to teach and share experience, morale-building good humor, relations with the public and civic engagement are among other less tangible skills also important and evaluatable.

The key to useful evaluation for a planner is insightful information about how well components of the system perform as a system. To obtain that full picture of system performance, thorough models of both criteria and functionality are required, and evaluation has to track performance across the system’s full operational domain.